Introduction: Why Methodology Matters in Data Projects

In today’s data-driven world, companies generate more information than ever before. Yet raw data alone is not valuable – it’s what you do with it that counts. That’s why frameworks like CRISP-DM (Cross-Industry Standard Process for Data Mining) are so important.

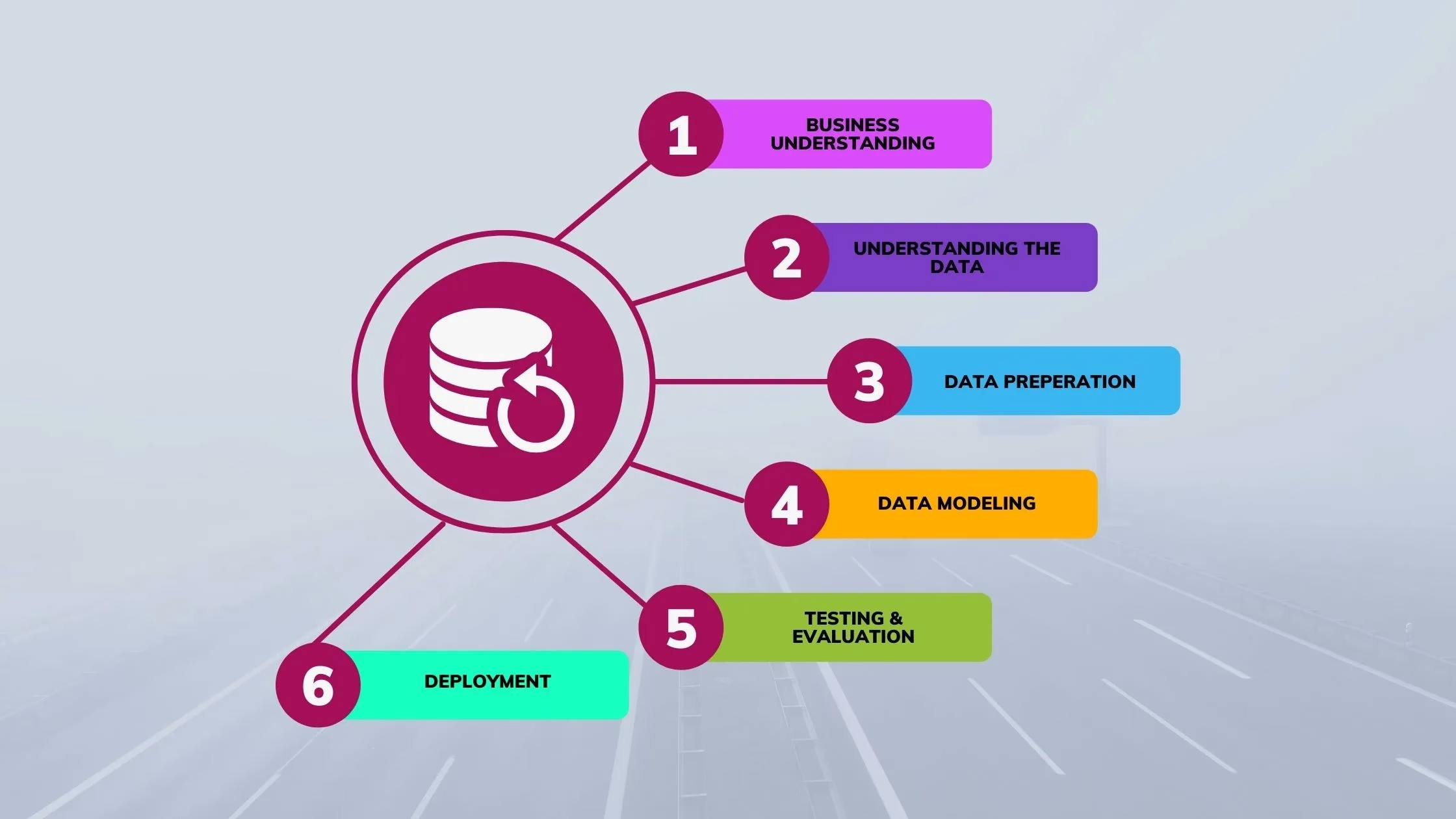

Whether you’re building a recommendation engine, cleaning messy customer records, or forecasting sales, a methodical approach ensures clarity, quality, and results. CRISP-DM remains one of the most widely adopted methodologies for structuring data analytics and mining workflows. Its major strength lies in its flexibility – you can return to previous phases as new insights emerge.

In this post, we’ll walk through the full CRISP-DM lifecycle, show how it ties into practical data cleaning, and explain how your business can benefit from using it consistently.

What Is CRISP-DM?

CRISP-DM stands for Cross-Industry Standard Process for Data Mining. Developed in the late 1990s by a consortium of industry leaders, it offers a clear, industry-agnostic roadmap for solving data problems.

CRISP-DM includes six core phases:

- Business Understanding

- Data Understanding

- Data Preparation

- Modeling

- Evaluation

- Deployment

Unlike linear methodologies, CRISP-DM encourages iteration. You can return to earlier phases as the project evolves, making it ideal for real-world, messy datasets and dynamic business questions.

Phase 1: Business Understanding

Every data project must start with a deep dive into the business context. Without clear objectives, even the best algorithms fall flat.

Key Questions to Ask:

- What problem are we solving?

- What decisions will this analysis support?

- Who are the stakeholders?

- What does success look like?

Outputs of This Phase:

- Project charter or brief

- Success metrics

- Business constraints (budget, timeline, tech)

At DieseinerData, we always begin client engagements with stakeholder interviews and a strategic goal-setting session to ensure alignment from day one.

Phase 2: Data Understanding

With the business problem in focus, the next step is exploring your available data. Think of this as detective work – before you clean or model, you must understand what you’re working with.

Activities Include:

- Data source identification (databases, APIs, flat files, etc.)

- Profiling the dataset: missing values, data types, ranges

- Exploratory Data Analysis (EDA)

- Identifying anomalies or inconsistencies

Tools Commonly Used:

- SQL

- Python (Pandas, Matplotlib, Seaborn)

- Power BI or Tableau for visual summaries
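A first profiling pass can be quite short. The sketch below assumes pandas and uses a made-up three-column dataset; the column names and values are purely illustrative, not taken from any real client data.

```python
import pandas as pd

# Hypothetical sample data; column names and values are illustrative only.
df = pd.DataFrame({
    "customer_id": [1, 2, 3, 4],
    "country": ["USA", "United States", None, "Canada"],
    "order_total": [120.5, None, 89.0, 15000.0],
})

# One row per column: data type, missing count, and number of distinct values.
profile = pd.DataFrame({
    "dtype": df.dtypes.astype(str),
    "missing": df.isna().sum(),
    "unique": df.nunique(),
})
print(profile)

# Ranges and basic statistics for the numeric columns.
print(df.describe())
```

Even on a toy table like this, the profile surfaces the kinds of issues this phase is meant to catch: missing values, two spellings of the same country, and an order total far outside the rest of the range.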

During this phase, it often becomes clear that significant cleaning and preparation are required – a common bottleneck we’ll address next.

Phase 3: Data Preparation

This is often the most time-consuming and error-prone phase in any data project. The goal is to take raw, messy inputs and convert them into clean, structured datasets ready for modeling or analysis.

Let’s walk through the sub-steps of effective data preparation:

1: Handle Missing Data

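- Remove Rows/Columns: Drop rows when only a small share is affected, or drop columns that are mostly empty

- Impute Values: Use the mean or median for numeric fields and the mode for categorical ones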

- Predict Missing Data: Regression, KNN, or ML-based imputation
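Here is a minimal sketch of the first two options, assuming pandas. The sample data and the 50% threshold for dropping a column are assumptions chosen for illustration; the right cutoff depends on your dataset.

```python
import pandas as pd

# Hypothetical sample data with gaps in every column.
df = pd.DataFrame({
    "age": [34.0, None, 29.0, 41.0],
    "segment": ["retail", "retail", None, "wholesale"],
    "notes": [None, None, None, "call back"],
})

# Drop columns that are mostly empty (assumed threshold: more than 50% missing).
mostly_empty = df.columns[df.isna().mean() > 0.5]
df = df.drop(columns=mostly_empty)

# Impute the numeric column with its median and the categorical column with its mode.
df["age"] = df["age"].fillna(df["age"].median())
df["segment"] = df["segment"].fillna(df["segment"].mode().iloc[0])

print(df)
```

Model-based imputation (regression or KNN) follows the same pattern but replaces the median and mode with predicted values.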

2: Standardize Formats

- Convert all dates to YYYY-MM-DD

- Normalize text fields (“USA” vs “United States”)

- Align units and decimals
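The three standardization tasks might look like this in pandas. The input date format (MM/DD/YYYY), the country lookup table, and the pound-to-kilogram conversion are all assumptions made for the example.

```python
import pandas as pd

# Hypothetical sample data; the incoming date format is assumed to be MM/DD/YYYY.
df = pd.DataFrame({
    "order_date": ["03/15/2024", "12/01/2023"],
    "country": ["USA", " united states "],
    "weight_lb": [2.0, 10.0],
})

# Dates: parse with an explicit format, then write them back as YYYY-MM-DD.
parsed = pd.to_datetime(df["order_date"], format="%m/%d/%Y")
df["order_date"] = parsed.dt.strftime("%Y-%m-%d")

# Text: trim and lowercase, then map known variants to one canonical label.
# Values without a mapping keep their original spelling.
country_map = {"usa": "United States", "united states": "United States"}
cleaned = df["country"].str.strip().str.lower()
df["country"] = cleaned.map(country_map).fillna(df["country"])

# Units and decimals: convert pounds to kilograms and round to two places.
df["weight_kg"] = (df["weight_lb"] * 0.45359237).round(2)

print(df)
```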

3: Remove Duplicates

- Identify exact and near matches

- Use scripts or tools like drop_duplicates() in Python or Excel’s built-in filters
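As a sketch, assuming pandas and a small invented contact list: exact duplicates fall out with a single call, while near matches need a normalized comparison key first. Lowercasing and trimming is only one simple way to define "near"; fuzzy matching would be a different technique.

```python
import pandas as pd

# Hypothetical contact list: rows 0 and 1 differ only by case and whitespace,
# rows 2 and 3 are exact copies.
df = pd.DataFrame({
    "name": ["Ann Lee", "ann lee ", "Bob Roy", "Bob Roy"],
    "email": ["ann@example.com", "ANN@example.com",
              "bob@example.com", "bob@example.com"],
})

# Exact matches: removes row 3 only.
exact = df.drop_duplicates()

# Near matches: build a normalized key, then keep the first row for each key.
key = df.assign(
    name=df["name"].str.strip().str.lower(),
    email=df["email"].str.strip().str.lower(),
)
deduped = df.loc[~key.duplicated(keep="first")]

print(len(df), len(exact), len(deduped))
```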

4: Correct Errors

- Flag typos, inconsistent formats, or out-of-range values

- Apply validation rules or cross-reference against source data
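Validation rules can be expressed as named boolean checks, so every flagged row carries a reason. This is a sketch assuming pandas; the quantity range (1 to 100) and the deliberately loose email pattern are hypothetical rules, not recommendations.

```python
import pandas as pd

# Hypothetical order data with one bad quantity and one bad email.
df = pd.DataFrame({
    "order_id": [101, 102, 103],
    "quantity": [2, -1, 500],
    "email": ["a@example.com", "not-an-email", "c@example.com"],
})

# Each rule is True where a row breaks it. The range and the regex are assumptions.
rules = {
    "quantity_out_of_range": ~df["quantity"].between(1, 100),
    "email_malformed": ~df["email"].str.match(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
}
flags = pd.DataFrame(rules)

# Flag rows for review instead of silently changing them.
df["needs_review"] = flags.any(axis=1)

print(df.join(flags))
```

Flagging rather than overwriting keeps the original values available for cross-referencing against the source system.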

5: Normalize and Transform

- Scaling: Normalize numerical data for modeling

- Encoding: Convert categories into numbers using one-hot encoding or label encoding

- Parsing: Split combined fields (like full names or addresses)
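All three transformations fit in a few lines of pandas. The sketch below uses min-max scaling and one-hot encoding as examples; the sample data is invented, and splitting names on the first space is a simplification that will not hold for every name.

```python
import pandas as pd

# Hypothetical customer data.
df = pd.DataFrame({
    "full_name": ["Ann Lee", "Bob Roy", "Cy Dunn"],
    "channel": ["web", "store", "web"],
    "spend": [10.0, 20.0, 30.0],
})

# Scaling: min-max normalization maps the column onto the range 0 to 1.
spend = df["spend"]
df["spend_scaled"] = (spend - spend.min()) / (spend.max() - spend.min())

# Encoding: one-hot encode the categorical column into 0/1 indicator columns.
df = pd.get_dummies(df, columns=["channel"], dtype=int)

# Parsing: split the combined name field on the first space only.
df[["first_name", "last_name"]] = df["full_name"].str.split(" ", n=1, expand=True)

print(df)
```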

6: Validate and Document

- Perform spot-checks and generate summary statistics

- Document every change for reproducibility and audits
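One lightweight way to document changes is to route every cleaning step through a wrapper that records what it did. The `apply_step` helper and `change_log` list below are hypothetical names introduced for this sketch, which assumes pandas.

```python
import pandas as pd

change_log = []  # one entry per cleaning step, kept for audits

def apply_step(df, description, func):
    """Apply one cleaning step and record its effect on the row count."""
    result = func(df)
    change_log.append({
        "step": description,
        "rows_before": len(df),
        "rows_after": len(result),
    })
    return result

# Hypothetical product data with a duplicate row and a missing SKU.
raw = pd.DataFrame({
    "sku": ["A1", "A1", "B2", None],
    "price": [9.99, 9.99, 4.5, 3.0],
})

clean = apply_step(raw, "drop rows without sku",
                   lambda d: d.dropna(subset=["sku"]))
clean = apply_step(clean, "drop exact duplicates",
                   lambda d: d.drop_duplicates())

# The log is the documentation; the summary statistics are the spot-check.
print(pd.DataFrame(change_log))
print(clean.describe())
```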

7: Automate When Possible

- Use Python pipelines, SQL stored procedures, or ETL tools like Talend, Alteryx, or Airbyte

- Schedule regular validation and data refresh routines
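In Python, a pipeline can be as simple as a chain of small, single-purpose functions. This sketch assumes pandas; the step functions and column names are illustrative, and scheduling would be handled by whatever orchestration tool you already use.

```python
import pandas as pd

def drop_incomplete(df):
    """Remove rows that have no customer_id."""
    return df.dropna(subset=["customer_id"])

def normalize_email(df):
    """Trim whitespace and lowercase email addresses."""
    return df.assign(email=df["email"].str.strip().str.lower())

def dedupe(df):
    """Keep one row per customer_id and email pair."""
    return df.drop_duplicates(subset=["customer_id", "email"])

def run_pipeline(df):
    """Run every step in order; a scheduler can call this on each refresh."""
    return (
        df.pipe(drop_incomplete)
          .pipe(normalize_email)
          .pipe(dedupe)
          .reset_index(drop=True)
    )

# Hypothetical raw extract.
raw = pd.DataFrame({
    "customer_id": [1, 1, 2, None],
    "email": ["A@example.com ", "a@example.com",
              "b@example.com", "c@example.com"],
})
clean = run_pipeline(raw)
print(clean)
```

Because each step is a plain function, it can be tested on its own and reordered without touching the others.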

DieseinerData often builds automated pipelines for clients to ensure these steps run continuously with minimal manual intervention.

Phase 4: Modeling

With your data clean and structured, you can begin modeling. At this point, you apply algorithms to uncover insights, classify data, or make predictions.

Types of Models You Might Use:

- Regression: For predicting numeric outcomes (e.g., sales forecasting)

- Classification: For predicting categories (e.g., customer churn)

- Clustering: For grouping similar items (e.g., market segmentation)

- Recommendation Engines: Based on collaborative filtering or content similarity

Modeling is highly iterative. You may try several techniques and adjust parameters multiple times before you land on the best-performing version.
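To make the regression case concrete, here is a deliberately tiny sales-forecasting sketch that fits a straight-line trend with NumPy. The monthly figures are invented, and a linear trend is only one candidate; in practice you would compare it against other techniques on held-out data.

```python
import numpy as np

# Hypothetical monthly sales for months 1 through 6.
months = np.array([1, 2, 3, 4, 5, 6])
sales = np.array([110.0, 120.0, 130.0, 140.0, 150.0, 160.0])

# Fit on the first five months and hold out the sixth to check the fit.
slope, intercept = np.polyfit(months[:5], sales[:5], deg=1)
holdout_error = abs((slope * months[5] + intercept) - sales[5])

# Forecast the next, unseen month.
forecast = slope * 7 + intercept

print(f"slope={slope:.2f}, holdout error={holdout_error:.2f}, month 7 forecast={forecast:.2f}")
```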

Phase 5: Evaluation

Once you’ve built one or more models, it’s time to assess their performance. But model accuracy isn’t the only metric that matters.

Consider the Following:

- Does the model solve the original business problem?

- How well does it generalize to new data?

- Are there biases or fairness issues?
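A small example shows why accuracy alone can mislead. The sketch below computes accuracy, precision, and recall in plain Python on invented labels, where 1 marks the outcome you care about (for example, a customer who churns).

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }

# Hypothetical held-out test set: 3 positives out of 10, and the model finds only one.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Here the model is 80% accurate yet misses two of the three positive cases, which may be exactly the cases the business needs to catch.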

You may discover that the model works technically, but doesn’t meet business needs. If so, it’s entirely appropriate to return to earlier phases and refine.

At DieseinerData, we don’t just optimize models for precision – we align them with business strategy, user experience, and operational feasibility.

Phase 6: Deployment

A successful model must move from the data science lab into the real world.

Deployment May Include:

- Integrating predictions into dashboards

- Embedding models in web apps or APIs

- Triggering automated workflows based on model output

- Publishing reports or scorecards for stakeholders

Deployment also includes monitoring. You’ll need to watch for model drift, where predictions become less accurate over time due to changing data.
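A very basic drift check compares recent accuracy against the accuracy measured at launch. The function name, the weekly figures, and the five-point tolerance below are all assumptions for illustration; production monitoring usually tracks input distributions as well.

```python
def find_drift_periods(baseline_accuracy, recent_accuracies, tolerance=0.05):
    """Return the indexes of periods where accuracy fell more than
    `tolerance` below the baseline measured at deployment."""
    threshold = baseline_accuracy - tolerance
    return [i for i, acc in enumerate(recent_accuracies) if acc < threshold]

# Hypothetical weekly accuracy after launch, against a baseline of 0.80.
weekly_accuracy = [0.81, 0.78, 0.74, 0.70]
drifting_weeks = find_drift_periods(0.80, weekly_accuracy)

print(drifting_weeks)
```

Weeks flagged this way are a signal to loop back to earlier phases, for example to refresh the training data or revisit the features.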

At DieseinerData, we build robust deployment pipelines using modern DevOps practices and ensure maintainability through thorough documentation and version control.

Why CRISP-DM Works: It’s Not One-and-Done

A key strength of CRISP-DM is that it’s nonlinear. After deployment, feedback loops often lead back to earlier stages.

- Business goals evolve

- Data pipelines improve

- User behavior changes

- External conditions shift

In every case, the CRISP-DM framework allows your team to pivot intelligently and efficiently.

CRISP-DM in Action: Practical Example

Imagine a national retailer wants to reduce product return rates. Using CRISP-DM, the project unfolds as follows:

- Business Understanding: Reduce return rates by 15%

- Data Understanding: Analyze historical return data by product, customer, and channel

- Data Preparation: Clean missing return reasons, normalize product categories

- Modeling: Train a logistic regression model to predict high-risk purchases

- Evaluation: The model achieves 78% recall on a test set

- Deployment: Sales team receives alerts on high-risk transactions; marketing sends pre-emptive satisfaction surveys

Because the process was structured, repeatable, and measurable, the retailer quickly realized value – and improved customer satisfaction.

Conclusion: From Framework to Competitive Advantage

CRISP-DM isn’t just a theoretical model – it’s a practical, proven way to structure data projects that deliver impact. Whether you’re cleansing records, predicting sales, or automating reports, this methodology ensures consistency, transparency, and adaptability.

At DieseinerData, we’ve used CRISP-DM to help clients in retail, construction, and security modernize their analytics practices. We tailor each phase to your needs, automating where possible, validating everything, and aligning each step with your business goals.

Let’s Structure Your Data for Success

Is your data scattered, inconsistent, or underutilized? Don’t guess your way through analytics – follow a proven process.

Contact DieseinerData today to build a CRISP-DM-powered data strategy that delivers results.